First some history of my robot project

1) First I started with what I expected from this project:

- the robot should be easily adaptable and extendable (future proof), for both software and hardware

- learn Linux (as it is coming up more and more in the SW world)

=> the robot should not be a 'small robot on wheels with microprocessor', but more a PC on wheels.

2) In a (Velleman) electronics magazine I saw an electronics project "K8000" (an I/O card that can be connected to the parallel port of a PC) and "K8005" (a stepper motor card that can be connected to the K8000). Searching the internet I found several K8000 sites with a lot of information (among others a Linux driver for these cards). So I bought the cards and soldered them.

To test them, I connected the K8000 and 2 K8005 boards to my PC (running both Windows and Linux, more about this later). The test succeeded (i.e. the boards were soldered correctly the first time!).

3) At the "HCC beurs" (a PC market) I found a Pentium motherboard with RAM and CPU. All I had to do was put all these boards together. A long, long time ago I received some Meccano from my grandmother. Ideal for this job (since one can extend it easily).

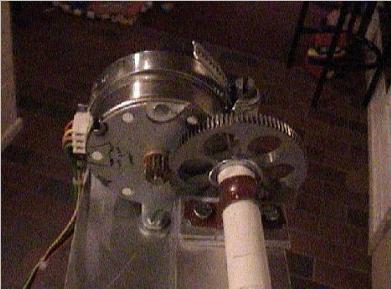

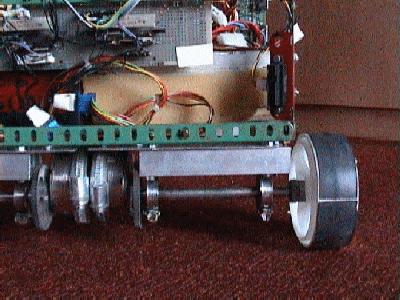

4) Now I had to add some wheels. I found them at a model shop, and with some tubes etc. I was able to make 2 independent wheels (so the robot can turn left and right).

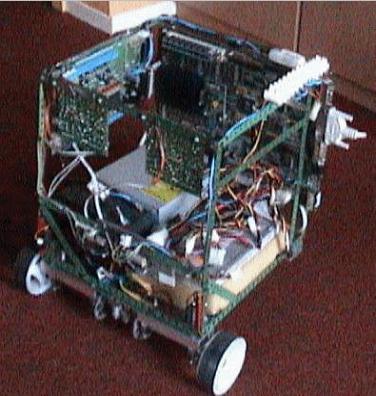

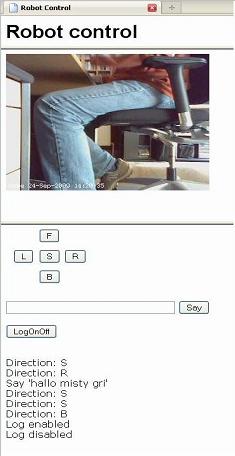

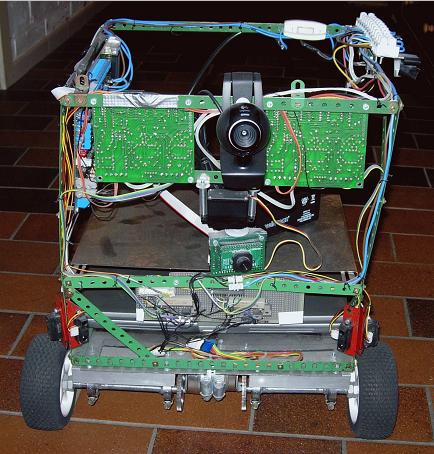

5) After putting it all together, this was the result:

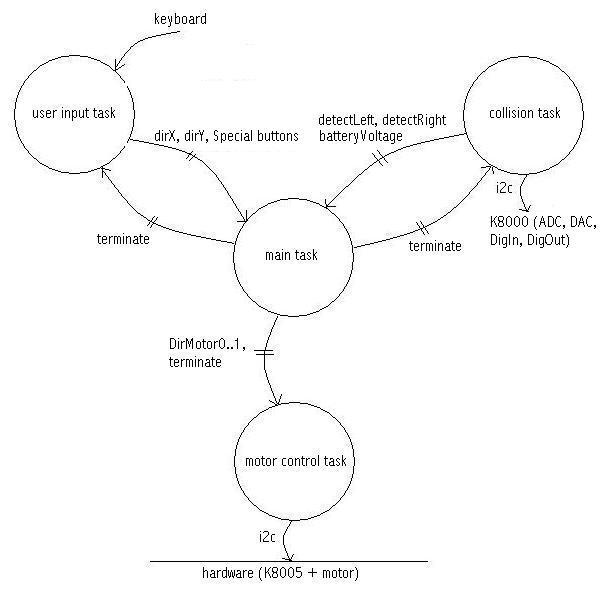

6) So now the hardware was ready, and I had to make some software for it. Because I'm a software engineer and my intention was to learn more about Linux, I made a beautiful software architecture with some processes, queues and semaphores (see more at point 16 below). After some debugging (with my first steps in DDD, a Linux debugger) I got it running (when the wheels were not touching the ground) !!! What headaches I had with how to exit processes in a good way ;-).

But then another problem came up: the stepper motors that were delivered with the K8005 (Ming Jong ST28) were not strong enough (i.e. the torque was too low). With some help from the Yahoo K8000 community (especially Jukka Piepponen) and a colleague (Henk van Gijn) I got some clues about what the specifications of my stepper motors should be. (continued at 8.)

7) On my own PC I noticed that the RAM modules had some faults. My own PC runs both Windows and Linux, while the "PC of the robot" (also a Pentium, running at roughly the same speed) runs only Linux, and for Linux there is a BadRAM patch available. So I wanted to swap the memory modules. Result: I blew up the motherboard of the robot (I inserted one or more RAM modules the wrong way round, causing a short circuit that made the RAM socket(s) useless). So I bought a new "cheap PC" on eBay for the robot and installed it.

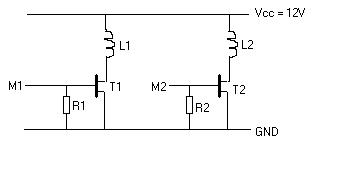

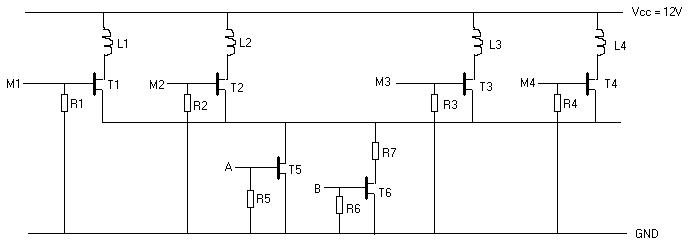

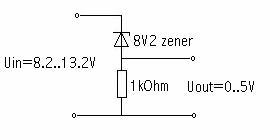

8) In a local second-hand shop I saw 2 old HP DeskJet 500+ printers (very cheap: EUR 2.50 apiece). I knew there were stepper motors inside and hoped that they would be sufficient. When I opened them, I also found a gear (which I used) and a DC motor. When I first put everything together the torque was not enough, but I was not yet exploiting the full potential of the stepper motor (a Minebea PM55L-48). My colleague Henk van Gijn made a circuit drawing to supply 12 Volts/0.6 Amp per coil. This worked, but because the motor was powered all the time, it became quite warm. So he added some extra components to the drawing, so that the motor is only powered when the robot should drive; when it is parked the power to the motor is switched off (through a FET). This works fine.
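As a rough check on why the motor became warm, here is the power per coil. This is only an estimate and assumes the full 12 V is across one coil while it carries 0.6 A; how many coils are energized at the same time depends on the stepping mode, which is not stated here.

$$
P_{\text{coil}} = V \cdot I = 12\,\mathrm{V} \times 0.6\,\mathrm{A} = 7.2\,\mathrm{W}
$$

With the motor powered all the time, this heat is dissipated continuously, also when the robot stands still.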

Here are the drawings:

Simple drawing

Advanced drawing

R1..R4 = 1k8

R5, R6 = 4k7

R7 = 18 Ohm, 10 Watt

T1..T6 = BUZ73

L1..L4 are the stepper motor coils, see the K8005 manual for details.

M1..M4 are connected to the K8005

A and B are connected to digital outputs of the K8000 (connect them like a TTL output as described in the K8000 manual). The three states are: drive: A=1, B=0; park: A=0, B=1; off: A=0, B=0.

These drawings are for 1 motor, so typically you need everything twice.
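The A/B states above could be handled in software roughly like this. It is only a minimal sketch in Python, not my actual robot code: `MotorPower`, `apply_mode` and the `write_output` callback are hypothetical names, and the real call to set a K8000 digital output depends on the driver you use. Clearing both lines before setting one is also an assumption, made because the combination A=1, B=1 is not one of the listed states.

```python
from enum import Enum
from typing import Callable


class MotorPower(Enum):
    """The three states from the text, stored as (A, B) output levels."""
    DRIVE = (1, 0)
    PARK = (0, 1)
    OFF = (0, 0)


def apply_mode(mode: MotorPower, write_output: Callable[[str, int], None]) -> None:
    """Switch one motor circuit to the given state.

    write_output(name, level) is a hypothetical callback that sets the
    K8000 digital output wired to line "A" or "B" to 0 or 1.
    """
    a, b = mode.value
    # Assumption: clear both lines first, so A and B are never 1 together.
    write_output("A", 0)
    write_output("B", 0)
    if a:
        write_output("A", 1)
    if b:
        write_output("B", 1)


if __name__ == "__main__":
    # Stand-in for the real hardware: just remember the last level per line.
    lines = {}
    apply_mode(MotorPower.DRIVE, lambda name, level: lines.__setitem__(name, level))
    print(lines)
```

For two motors you would call `apply_mode` once per motor, each with its own callback for its own pair of K8000 outputs.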

9) I noticed that the wheels (as you can see in the pictures they are made of plastic) didn't have a lot of grip on the floor. To overcome that, I cut up a bicycle inner tube and fixed it with adhesive tape to the two wheels that are connected to the motors. This works very well.

10) At the moment the robot can move, but not with a lot of torque (=force) and only forward/backward (left/right is not possible). The robot wheels are not optimized (for minimal friction), so that is my next goal: adding high precision bearings (idea from Jukka Piepponen).

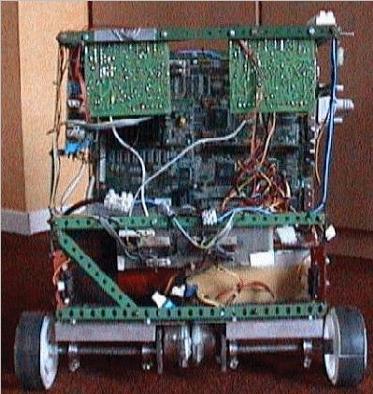

Here are two pictures of the wheels (with gear):